Lawyers and legal professionals often need to compare documents like contracts and agreements, particularly during negotiations or before signing an execution version of a contract. As it’s critical to find changes quickly and accurately, you can’t rely on time-consuming and error-prone manual reviews. There are free tools like Microsoft Word Compare, but these aren’t powerful enough for legal professionals who need the highest speed and accuracy when running complex document comparisons. As a result, most legal professionals are now using specialist tools like Draftable Legal to compare documents (read more about Draftable Legal vs Microsoft Word Compare for legal document comparison).

However, we’re always interested in exploring what’s possible with the latest technology. ChatGPT, which uses Large Language Models (LLMs) developed by OpenAI, has shown previously unimaginable performance when analysing and generating human-like text. It has also become infamous for its factual errors, known as hallucinations. This is a major concern for legal professionals who require the highest degree of accuracy in their work.

With recent studies showing that hallucinations occur in one out of every six legal questions, and that even the best-performing LLMs cannot be relied on to answer legal questions, we wanted to ask: is ChatGPT reliable enough for legal professionals in document comparison? We evaluate its performance (including testing for hallucinations) and share our findings in this guide on using ChatGPT to compare two documents, including its capabilities, limitations, best practices and prompts.

How to use ChatGPT to compare two documents?

If you’re using the free version of ChatGPT (GPT-3.5), you can extract the text you want to compare, paste it into the chat interface, and request a comparison.

If you’re using the paid version (GPT-4 and GPT-4o), you have the additional option to upload a variety of document types for comparison. ChatGPT will analyse the text and provide a high-level overview of the differences between the document versions.

Note: You should never share confidential client data or your company’s intellectual property if you are using the free version of ChatGPT, as any data you upload to this model is not private or secure. You should also be wary of uploading confidential data to paid ChatGPT accounts: although OpenAI does not use uploaded data in paid accounts for model training, uploading it may still contravene your firm’s policies. You should always check your internal policies before using any AI tool, and general best practice is to anonymise or redact confidential data before using ChatGPT.

Document file types you can upload to ChatGPT for comparison:

- Microsoft Word Documents (.doc, .docx)

- PDF Files (.pdf)

- Excel Files (.xls, .xlsx)

- PowerPoint Files (.ppt, .pptx)

- Plain Text Files (.txt)

- Rich Text Format (.rtf)

- HTML Files (.html, .htm)

Testing ChatGPT’s performance

We tested both the free and paid versions of ChatGPT for document comparison, making 20 attempts to develop a good starting prompt and get an initial understanding of hallucination risk. The free version (GPT-3.5) was riddled with errors, even after using repeated and specific prompting. It was unable to identify text deletions or formatting changes such as bold or italics, and even hallucinated changes and text that didn’t exist.

We then tested the paid versions (ChatGPT-4 and ChatGPT-4o). Both were a noticeable improvement, but they still required extensive prompting and produced inconsistent and inaccurate output. Both versions performed better when the documents were uploaded rather than pasted into the interface as text. Here are some of the key results:

How accurately does ChatGPT identify changes, and how much does it hallucinate?

- ChatGPT-4 identified 68% of changes correctly on each attempt, while ChatGPT-4o identified 71% of the changes correctly on each attempt.

- Both versions hallucinated on 86% of attempts. However, GPT-4o produced slightly more hallucinations, averaging 1.42 per attempt, while GPT-4 averaged 1.28.

How reliable is ChatGPT in detecting changes?

Both versions gave different outputs on every attempt. For example, a version would identify a formatting change in one attempt and then fail to identify the same change in the next.

- They were both most consistent with identifying structural and formatting changes, with GPT-4 correctly identifying formatting changes 100% of the time and structural changes 86% of the time. GPT-4o correctly identified formatting changes 93% of the time and structural changes 71% of the time.

- Both versions were moderately consistent with deletions and additions, correctly identifying these changes an average of 61% of the time.

- They were both least consistent with identifying character-level changes, correctly identifying these in 35% of attempts.

Note: It’s important to reiterate we performed a small number of tests, and as both GPT versions gave such different outputs on each attempt, you would need a much larger sample size to glean any meaningful difference in the performance of GPT-4 and GPT-4o.
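To make "character-level changes" concrete, here is a minimal sketch of a deterministic character-level comparison using Python's standard `difflib` module. The function name `char_level_changes` and the sample sentences are our own illustrations, and this is not the algorithm used by ChatGPT or by Draftable Legal. It simply shows the kind of single-character edit (for example, a changed number in a payment term) that is easy to miss.

```python
from difflib import SequenceMatcher


def char_level_changes(old: str, new: str) -> list[tuple[str, str, str]]:
    """Return (operation, old_text, new_text) for every span that differs."""
    # autojunk=False disables difflib's "popular element" heuristic, which can
    # hide changes in longer texts.
    matcher = SequenceMatcher(None, old, new, autojunk=False)
    return [
        (tag, old[i1:i2], new[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


# Illustrative sentences only, not taken from our test documents.
old = "Payment is due within 30 days."
new = "Payment is due within 60 days."
print(char_level_changes(old, new))
# [('replace', '3', '6')]
```

Because the comparison is rule-based, running it twice on the same inputs gives the same result, which is the property the ChatGPT outputs lacked in our tests.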

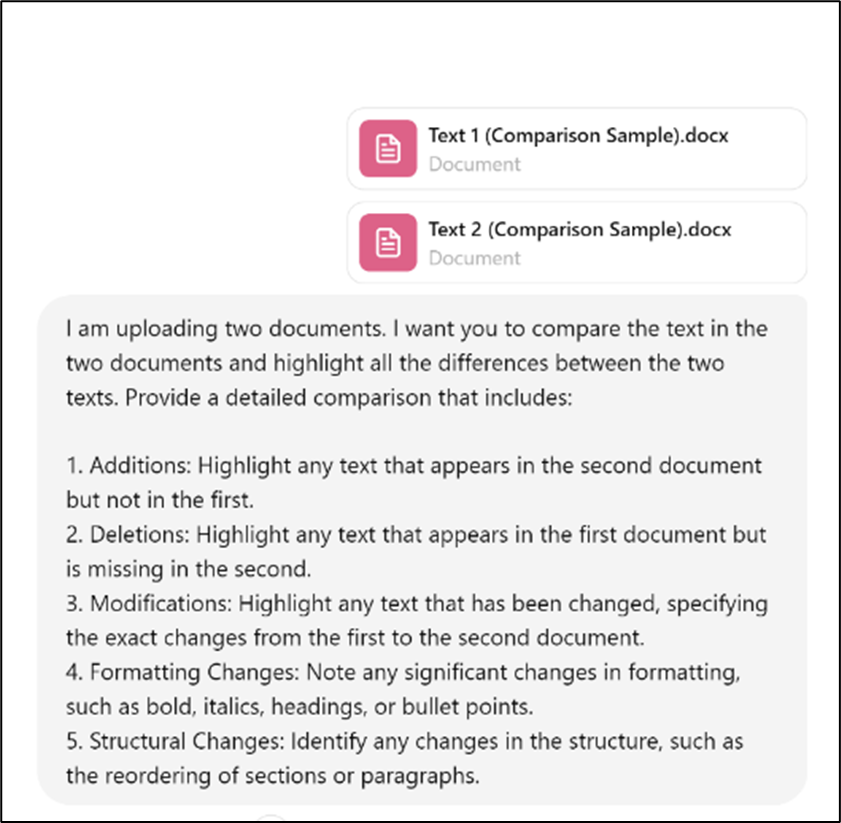

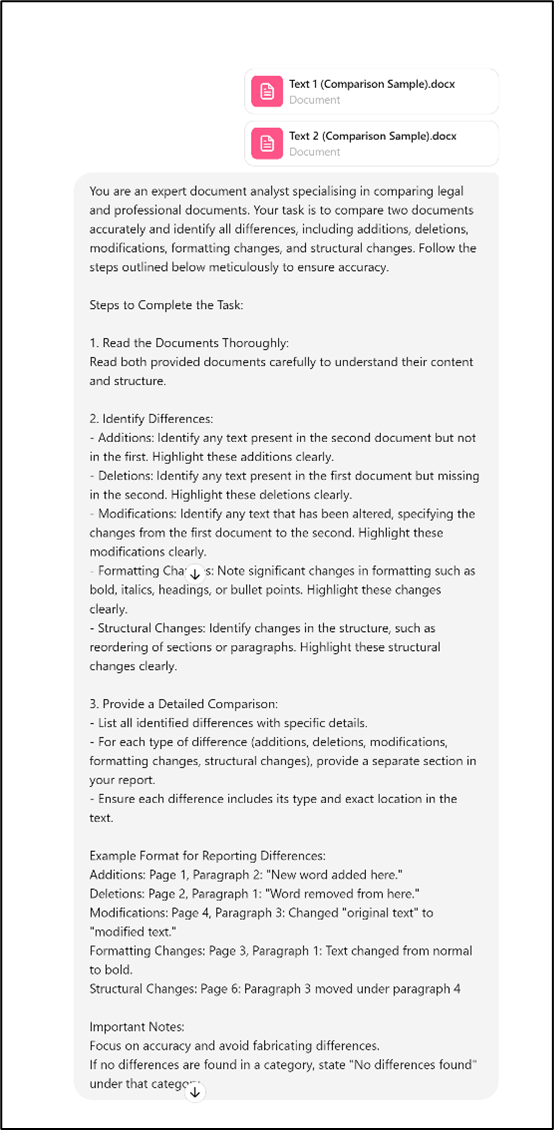

Prompts used to test ChatGPT in document comparison

We worked with our data scientists to build prompts using best practice prompting strategies as outlined in OpenAI's prompt engineering guide, including stating the role, step-by-step instructions, providing examples of outputs, and emphasising not to make up responses. However, we achieved more consistently accurate results with a simpler prompt. Here are the two prompts that generated the most accurate output (although they still produced multiple inaccuracies):

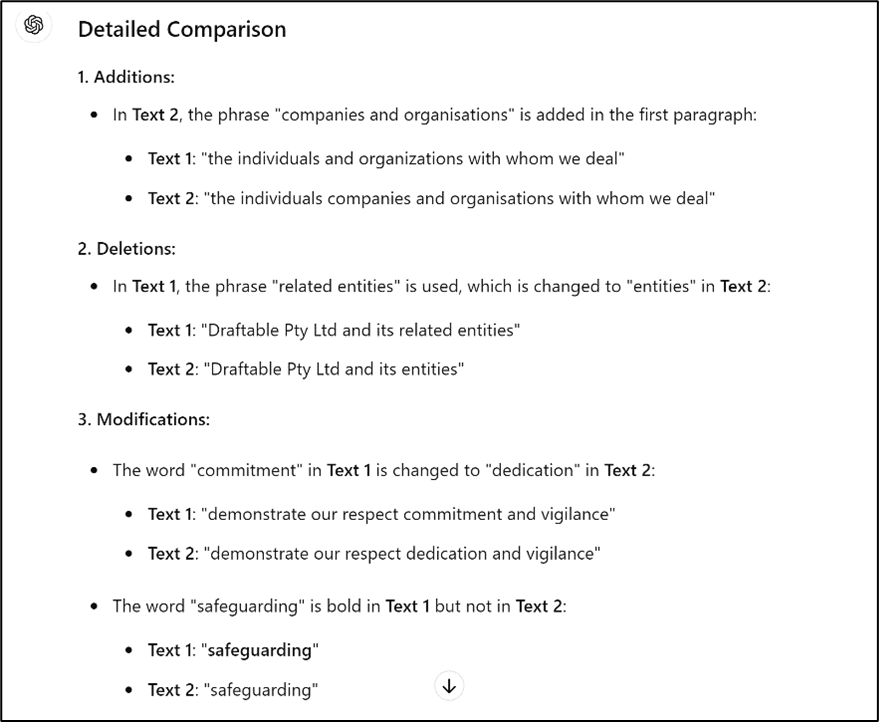

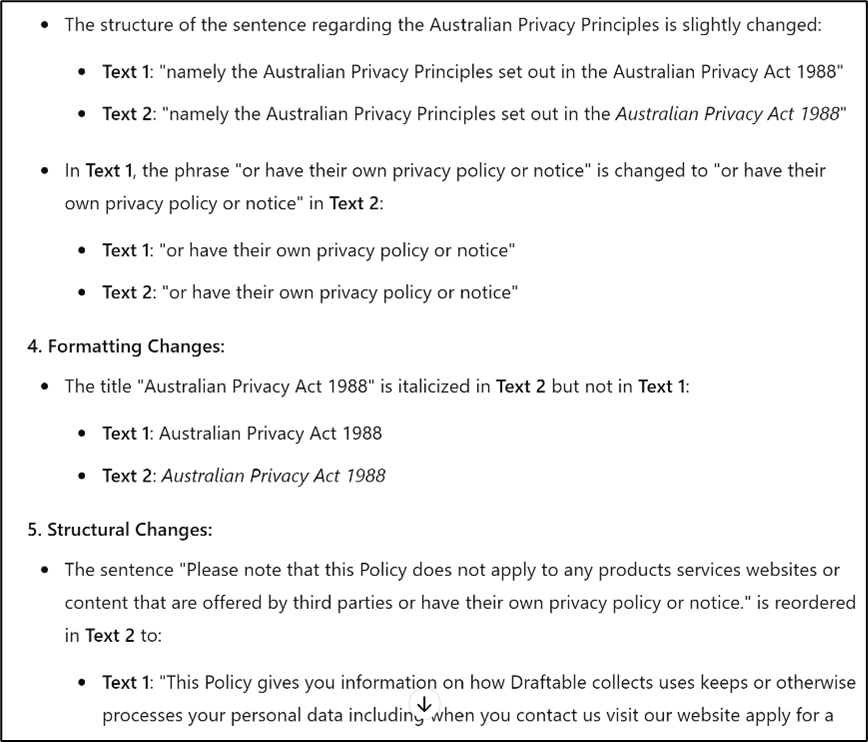

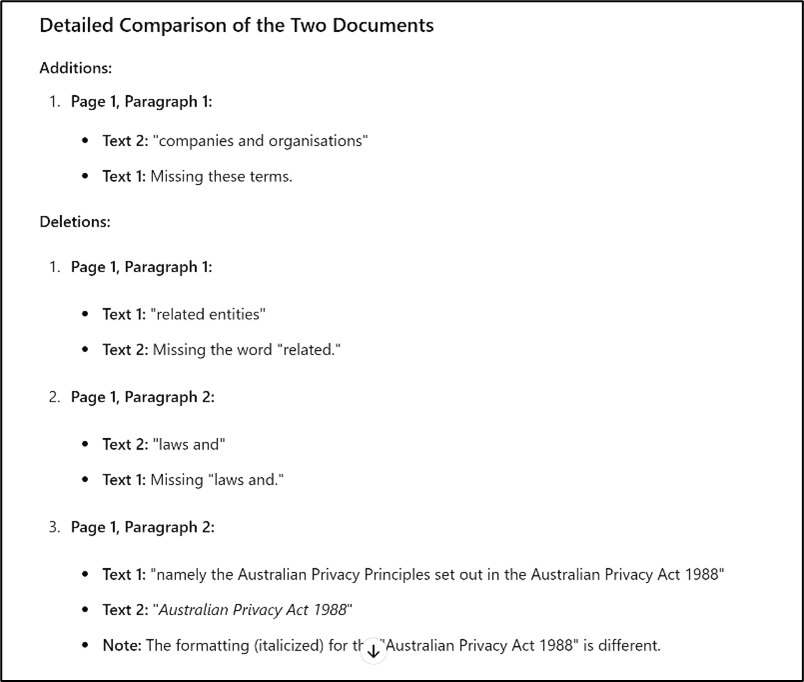

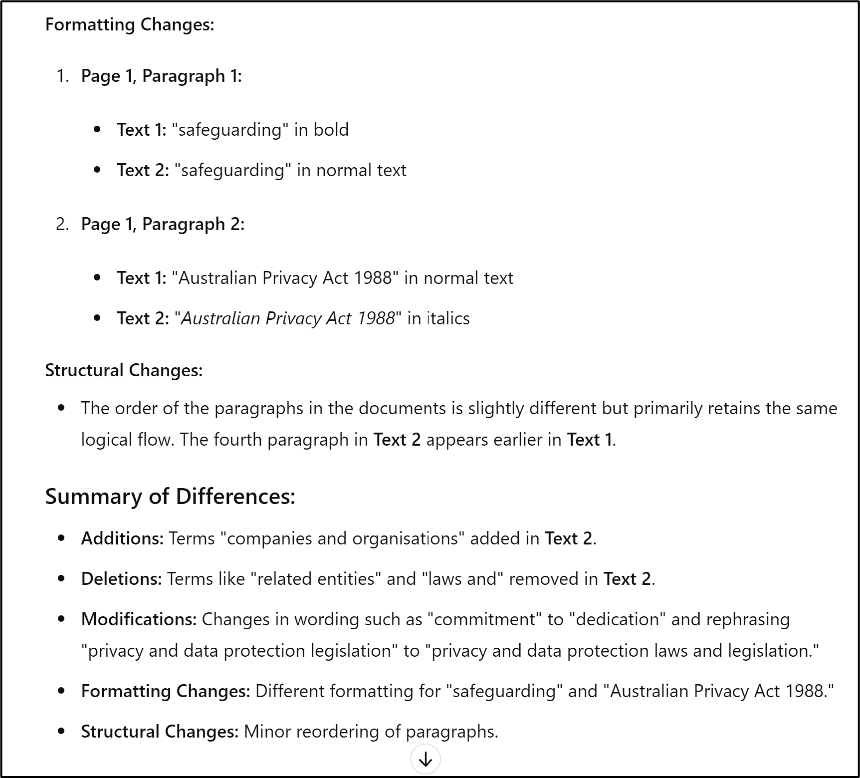

Simpler prompt and example output generated from this prompt

Extended prompt and example output generated from this prompt

Our findings after using ChatGPT’s AI to compare documents

While ChatGPT can technically compare two documents, there is an extremely high risk it will generate incomplete or incorrect output. We concluded that while ChatGPT may be able to compare very short, simple texts (such as a paragraph with minimal changes), it is not currently advanced enough to accurately compare any other texts or documents, especially in a legal setting.

ChatGPT does get better the more you use and prompt it, so if you still want to play around with ChatGPT for text comparison, here are some tips and best practices:

- Provide clear and detailed instructions: Ensure your prompts are specific and detailed to get the most accurate comparisons. You can include step-by-step instructions for the task, and clearly outline what changes you want ChatGPT to identify and how you’d like the changes to be presented. You can also continue prompting after the first output, with prompts such as “You did not identify all changes. I want you to identify all the changes between the two texts.”

- Review and validate outputs: A human will always need to review the outputs generated by ChatGPT and validate them against the original documents.

- Do not upload documents or text containing sensitive information: It’s important to protect sensitive client data when using AI tools like ChatGPT, as these tools share data with an external system. Only input generic or anonymised texts or documents.
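As a rough aid to the review step above, the sketch below checks whether text that ChatGPT quotes in its summary actually appears in either document. The helper names (`normalise`, `unverified_quotes`) and the sample clauses are hypothetical, and you would need to copy the quoted snippets out of the ChatGPT output yourself. It can only flag invented text. It cannot tell you about changes ChatGPT missed, so it does not replace a human review.

```python
import re


def normalise(text: str) -> str:
    """Lower-case and collapse whitespace so line wrapping does not cause false alarms."""
    return re.sub(r"\s+", " ", text).strip().lower()


def unverified_quotes(quotes: list[str], *documents: str) -> list[str]:
    """Return the quotes that appear in none of the supplied documents."""
    haystacks = [normalise(doc) for doc in documents]
    return [q for q in quotes if not any(normalise(q) in h for h in haystacks)]


# Illustrative, anonymised sample text.
original = "The Supplier shall deliver the Goods within 30 days."
revised = "The Supplier shall deliver the Goods within 60 days of the Order Date."

# Hypothetical snippets copied by hand from a ChatGPT comparison summary.
reported = ["within 60 days", "of the Order Date", "subject to force majeure"]

print(unverified_quotes(reported, original, revised))
# ['subject to force majeure']
```

Any snippet returned by `unverified_quotes` is a candidate hallucination and should be checked against the source documents before you rely on the summary.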

Read more: Why using ChatGPT helps law firms prepare for a generative AI future

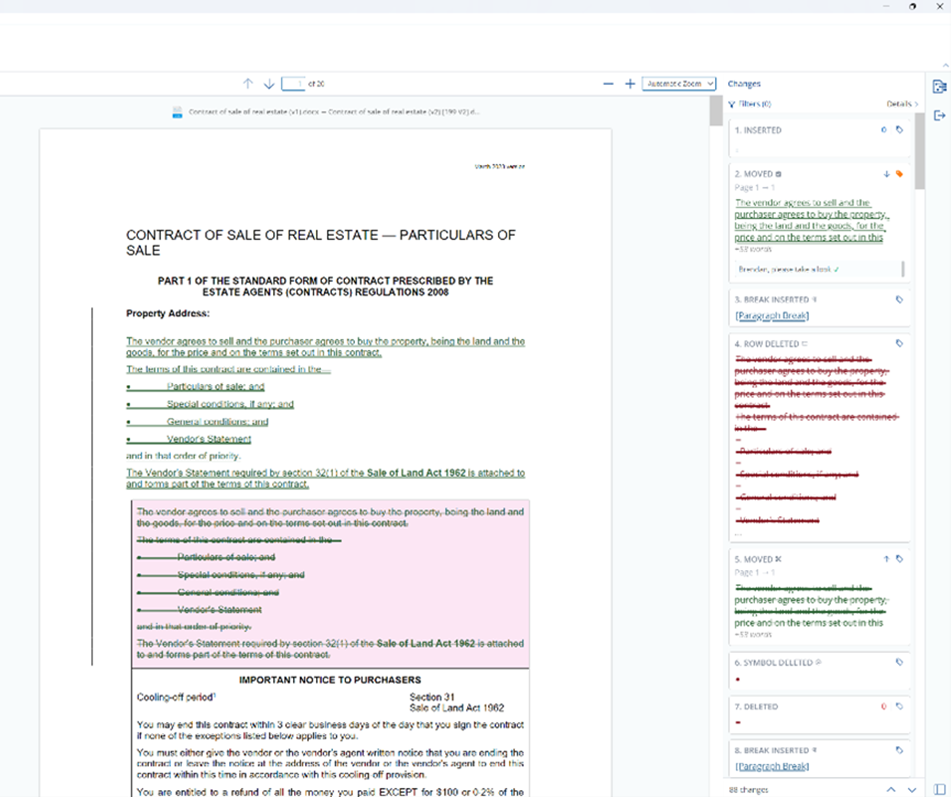

What is the best way to compare two documents?

If you’re a legal professional, you need a specialist document comparison solution that uses a rules-based comparison algorithm, like Draftable Legal. It’s fast, accurate and reliable, and has the workflow-enhancing features legal teams need: you can upload files and launch comparisons from wherever you are, including your DMS and Outlook, and run multiple comparisons at once with our Bulk Compare feature. You can even launch comparisons and email your comparison output with a single click. You simply upload your documents, customise your output preferences, and click compare. No prompting necessary.

With Draftable Legal you also get:

The ability to compare multiple file types:

- Word to PDF / PDF to Word

- Word to Word

- PDF to PDF (built-in OCR capability)

- PowerPoint documents

- Excel documents

- Text documents and free text

Multiple choices for comparison output:

- Word with tracked changes

- Word redline

- PDF redline (including changed pages only)

- Excel redline

- Side by side

Comprehensive integrations:

- MS Word and Outlook

- iManage Cloud / On-premises

- NetDocuments

- SharePoint Online (including multi-site browsing)

- Worldox

- Epona 365

Read more: How to track changes in contracts during the review and negotiation process

Here are some examples of Draftable Legal’s redline comparison output so you can see how easy it is to identify changes:

You can see all the features of Draftable Legal and how we stack up against other legal document comparison software in the tables below or click on this link to download a copy of the feature parity table.


Ready to switch to a specialist legal document comparison solution? Get in touch with our expert team or start your free five-day trial of Draftable Legal here.
